K8S: Network Policy

If you want to control traffic flow at the IP address or port level (OSI layer 3 or 4), consider using Kubernetes Network Policies for particular applications in your cluster. Network Policies are an application-centric construct that lets you specify how a pod is allowed to communicate with various network "entities" (other pods, namespaces, services, endpoints).
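Here is a minimal sketch of IP-level and port-level control. It lets pods labeled app=web accept HTTPS traffic only from one CIDR range. The policy name, the app=web label and both CIDR ranges are hypothetical placeholders, not values from this walkthrough.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: web-allow-https-from-cidr   # hypothetical name
  namespace: default
spec:
  podSelector:
    matchLabels:
      app: web                      # hypothetical label
  policyTypes:
  - Ingress
  ingress:
  - from:
    - ipBlock:                      # layer 3: allowed source IP range
        cidr: 10.0.0.0/16           # hypothetical CIDR
        except:
        - 10.0.5.0/24               # hypothetical subnet excluded from the range
    ports:
    - protocol: TCP                 # layer 4: allowed protocol and port
      port: 443
```

Because `from` and `ports` sit in the same rule, traffic must match both the source range and the port to be allowed.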

Network Policies are Kubernetes resources that control the traffic between pods and/or network endpoints. They use labels to select pods and rules to specify which traffic is allowed to and from those pods. Most CNI plugins support network policies. However, if the cluster's plugin does not and we create a Network Policy, that resource is simply ignored.

The most popular CNI plugins with network policy support are:

Weave

Calico

Cilium

Kube-router

Romana

With Network Policies we can add traffic restrictions to any number of selected pods, while the other pods in the namespace (those that are not selected) continue to accept traffic from anywhere.

Like other Kubernetes resources, a NetworkPolicy has the required fields apiVersion, kind, metadata and spec.

Its spec field contains all the settings that define the network restrictions within a given namespace:

podSelector selects the group of pods to which the policy applies

policyTypes defines which type of traffic is restricted (Ingress, Egress, or both)

ingress contains the whitelist rules for inbound traffic

egress contains the whitelist rules for outbound traffic
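This annotated skeleton shows how the four spec fields fit together. It is only a sketch: the policy name, the labels app=api, app=frontend and app=db, and port 5432 are hypothetical.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: api-policy              # hypothetical name
  namespace: default
spec:
  podSelector:                  # the pods this policy applies to
    matchLabels:
      app: api
  policyTypes:                  # the directions restricted for those pods
  - Ingress
  - Egress
  ingress:                      # allowed inbound sources
  - from:
    - podSelector:
        matchLabels:
          app: frontend
  egress:                       # allowed outbound destinations and ports
  - to:
    - podSelector:
        matchLabels:
          app: db
    ports:
    - protocol: TCP
      port: 5432
```

Pods in the namespace that do not carry app=api are not affected by this policy.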

Let's look at some basic network policies in more detail.

DENY all traffic to a namespace

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: denyall
  namespace: default
spec:
  podSelector: {}
  policyTypes:
  - Ingress
  - Egress
```

Here we declare both the Ingress and Egress policy types but define no whitelist rules, so all inbound and outbound traffic is blocked for every pod in the default namespace.
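If you only want to block inbound traffic and leave outbound traffic open, a minimal variant lists only Ingress in policyTypes. The policy name is illustrative.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-ingress   # illustrative name
  namespace: default
spec:
  podSelector: {}              # every pod in the namespace
  policyTypes:
  - Ingress                    # Ingress listed with no ingress rules: all inbound traffic is denied
  # Egress is not listed, so this policy does not restrict outbound traffic
```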

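We can also define an allow-all policy for the namespace. Network policies are additive (a pod's allowed traffic is the union of everything allowed by all policies that select it), so this effectively overrides the deny-all policy above.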

ALLOW all traffic to a namespace

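```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-all
  namespace: default
spec:
  podSelector: {}
  policyTypes:
  - Ingress
  - Egress
  ingress:
  - {}   # ingress is a list; a single empty rule matches all inbound traffic
  egress:
  - {}   # a single empty rule matches all outbound traffic
```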

Let's go further and see how we can isolate a namespace in K8s with a network policy:

ISOLATE Namespace

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: isolate-namespace
  namespace: default
spec:
  podSelector: {}
  policyTypes:
  - Ingress
  - Egress
  ingress:
  - from:
    - namespaceSelector:
        matchLabels:
          nsname: default
  egress:
  - to:
    - namespaceSelector:
        matchLabels:
          nsname: default
```

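As per this policy, pods in the default namespace are isolated. They can receive traffic only from, and send traffic only to, pods in namespaces labeled nsname=default. The namespaceSelector matches labels on the namespace, not on the pods, so the default namespace itself must carry that label (for example, kubectl label namespace default nsname=default).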
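The nsname label has to be added to the namespace by hand. As an alternative sketch, on Kubernetes versions that automatically label every namespace with kubernetes.io/metadata.name (enabled by default since 1.21), the same isolation can be written without a custom label. The policy name is illustrative.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: isolate-namespace-auto-label   # illustrative name
  namespace: default
spec:
  podSelector: {}
  policyTypes:
  - Ingress
  - Egress
  ingress:
  - from:
    - namespaceSelector:
        matchLabels:
          kubernetes.io/metadata.name: default   # label set automatically by Kubernetes
  egress:
  - to:
    - namespaceSelector:
        matchLabels:
          kubernetes.io/metadata.name: default
```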

Now let's get hands-on. For this demo, we have set up a Kubernetes cluster on Amazon EKS.

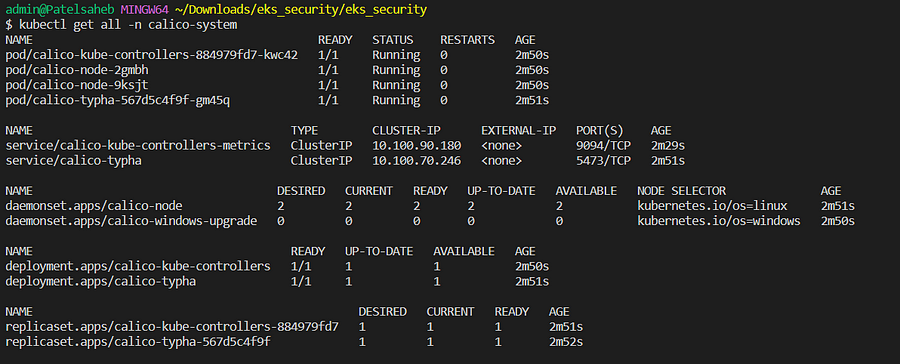

EKS does not enforce Network Policies by default, so here we will use the Calico add-on.

A few considerations when using Calico network policies on EKS:

Calico is not supported with Amazon EKS on Fargate.

Calico adds iptables rules on the nodes, and these rules may take priority over existing iptables rules you manage outside of Calico.

Use it only with IPv4 EKS clusters, not with IPv6 clusters.

For instructions on setting up an EKS cluster, please check the link below:

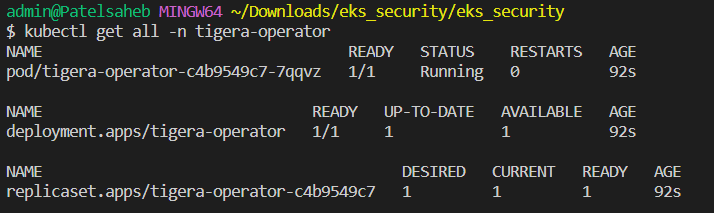

Let's connect to the EKS cluster and install Calico using the tigera-operator Helm chart:

```shell
helm repo add projectcalico https://docs.projectcalico.org/charts
helm repo update
helm install calico projectcalico/tigera-operator --version v3.21.4
```
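The install command above uses the chart's default values. If you want to tell the operator explicitly that it is running on EKS, a values file can be passed with -f. The sketch below assumes the tigera-operator chart exposes an installation.kubernetesProvider value. Check the chart's own values for your version before relying on it.

```yaml
# values.yaml (assumed key structure of the tigera-operator chart values)
installation:
  kubernetesProvider: EKS   # assumed setting: marks the cluster as Amazon EKS
```

It would then be used as: helm install calico projectcalico/tigera-operator --version v3.21.4 -f values.yaml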

Then:

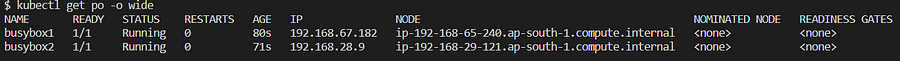

Let's deploy two test pods:

```shell
kubectl run busybox1 --image=busybox -- sleep 3600
kubectl run busybox2 --image=busybox -- sleep 3600
```
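For reference, each kubectl run command above creates a bare Pod roughly equivalent to the manifest below (shown for busybox1). kubectl run adds the run=<name> label by default. This is an illustrative sketch, not output captured from the demo cluster.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: busybox1
  labels:
    run: busybox1              # label kubectl run sets by default
spec:
  containers:
  - name: busybox1
    image: busybox
    args: ["sleep", "3600"]    # the arguments after "--" become the container args
```

Writing the manifest yourself also lets you set the name=in / name=out labels used later in this demo up front, instead of adding them with kubectl label.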

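By default, pods can communicate with each other without any issues. Look up busybox1's pod IP, then ping it from busybox2:

```shell
kubectl get pod busybox1 -o wide
kubectl exec -it busybox2 -- ping -c3 <busybox1-pod-IP>
```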

Let's set up a deny-all policy:

```shell
cat << EOF > deny-all.yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: deny-all
  namespace: default
spec:
  podSelector: {}
  policyTypes:
  - Ingress
  - Egress
EOF

kubectl create -f deny-all.yaml
```

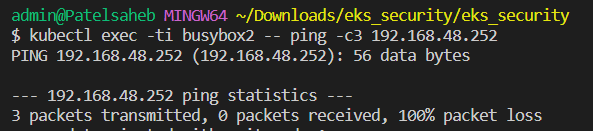

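Now run the same ping again. It should fail, because the deny-all policy blocks all inbound and outbound traffic for every pod in the default namespace.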
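Denying all egress also blocks DNS lookups, so once traffic is allowed again, pods can reach each other by IP but not by name. This is why the ping in this demo targets a pod IP. A common companion policy allows DNS egress to kube-system. It is a sketch that assumes the cluster DNS (CoreDNS) runs in kube-system on port 53 and that namespaces carry the automatic kubernetes.io/metadata.name label. The policy name is illustrative.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-dns-egress   # illustrative name
  namespace: default
spec:
  podSelector: {}
  policyTypes:
  - Egress
  egress:
  - to:
    - namespaceSelector:
        matchLabels:
          kubernetes.io/metadata.name: kube-system   # assumes the automatic namespace label
    ports:
    - protocol: UDP   # DNS queries normally use UDP 53
      port: 53
    - protocol: TCP   # larger DNS responses fall back to TCP 53
      port: 53
```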

Next, let's configure a policy that allows traffic based on pod labels:

```shell
cat << EOF > allow-out-to-in.yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-out-to-in
  namespace: default
spec:
  podSelector: {}
  ingress:
  - from:
    - podSelector:
        matchLabels:
          name: out
  egress:
  - to:
    - podSelector:
        matchLabels:
          name: in
  policyTypes:
  - Ingress
  - Egress
EOF
```

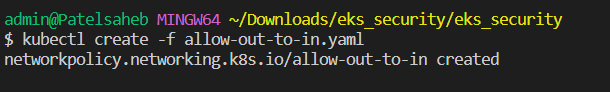

```shell
kubectl create -f allow-out-to-in.yaml
```

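This policy selects every pod in the default namespace (podSelector: {}) and:

allows incoming traffic only from pods labeled name=out,

allows outgoing traffic only to pods labeled name=in.

Now label busybox1 as the receiver (name=in) and busybox2 as the sender (name=out):

```shell
kubectl label pod busybox1 name=in
kubectl label pod busybox2 name=out
```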

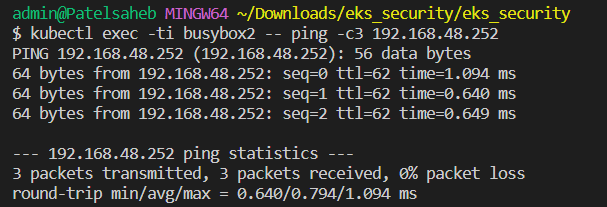

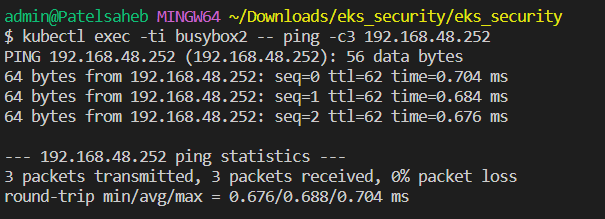

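Now busybox2 can ping busybox1 again, because egress from busybox2 to name=in pods and ingress to busybox1 from name=out pods are both allowed:

```shell
# 192.168.48.252 is busybox1's pod IP in this demo cluster
kubectl exec -ti busybox2 -- ping -c3 192.168.48.252
```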

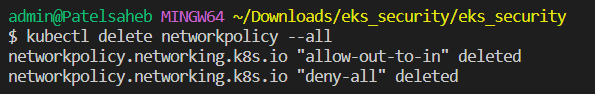
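The allow-out-to-in policy applies both rules to every pod in the namespace. A tighter sketch scopes each rule to only the pods that need it: only name=in pods accept traffic from name=out pods, and only name=out pods may send to name=in pods. The policy names are illustrative, and both policies are meant to sit alongside the deny-all policy.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: in-accepts-from-out    # illustrative name
  namespace: default
spec:
  podSelector:
    matchLabels:
      name: in                 # applies only to receivers
  policyTypes:
  - Ingress
  ingress:
  - from:
    - podSelector:
        matchLabels:
          name: out
---
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: out-sends-to-in        # illustrative name
  namespace: default
spec:
  podSelector:
    matchLabels:
      name: out                # applies only to senders
  policyTypes:
  - Egress
  egress:
  - to:
    - podSelector:
        matchLabels:
          name: in
```

With this split, any other pod added to the namespace stays fully denied by deny-all instead of inheriting the in/out rules.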

Finally, let's clean up all the policies we applied earlier:

```shell
# Deletes every NetworkPolicy in the current namespace (default here)
kubectl delete networkpolicy --all
```

The Kubernetes networking model guarantees that all pods in a cluster can communicate with each other, that is, they can exchange packets.

However, the networking semantics change once network policies are present: they move from default allow for all pods to default deny for selected pods, with explicit allow rules.